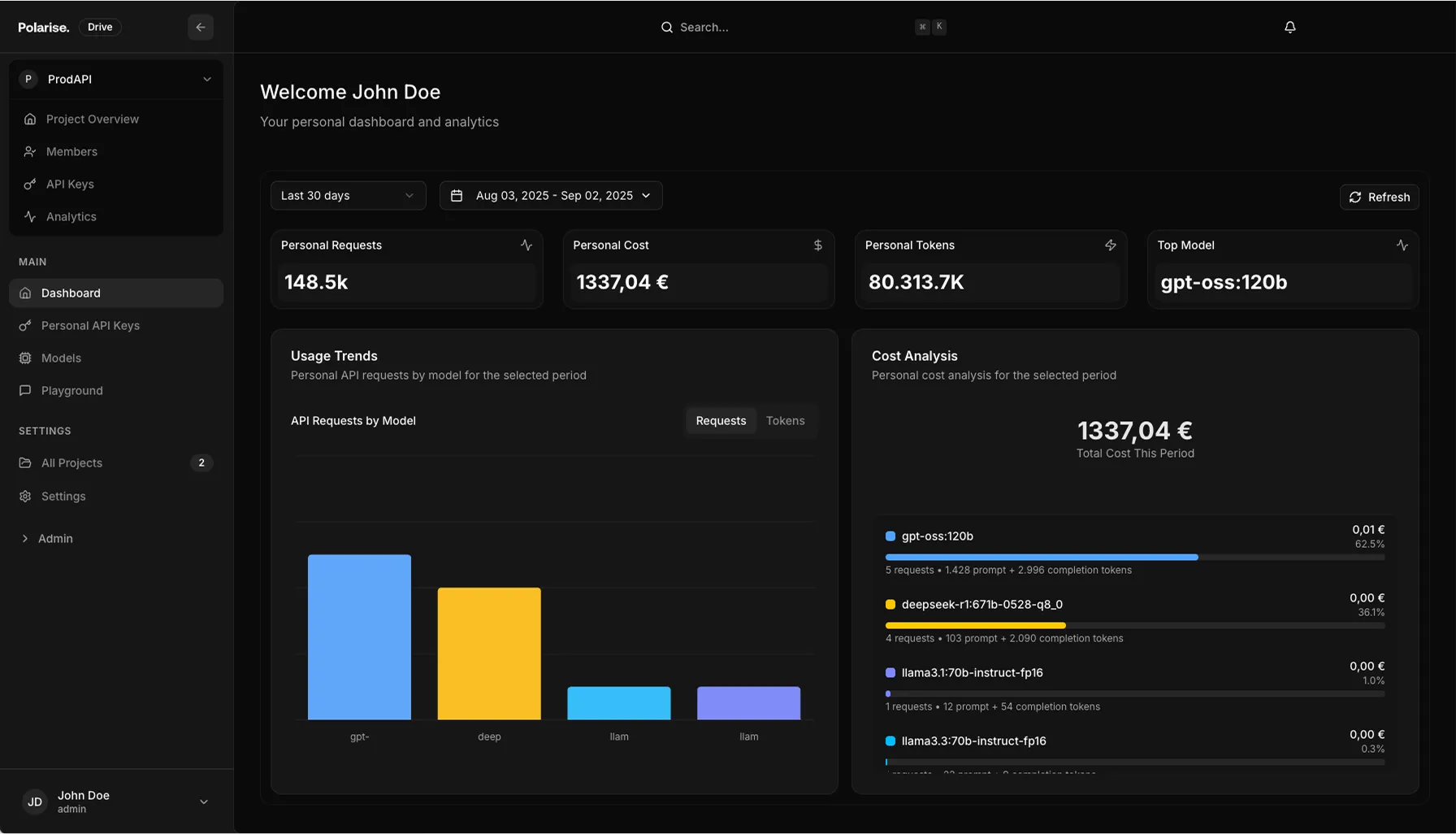

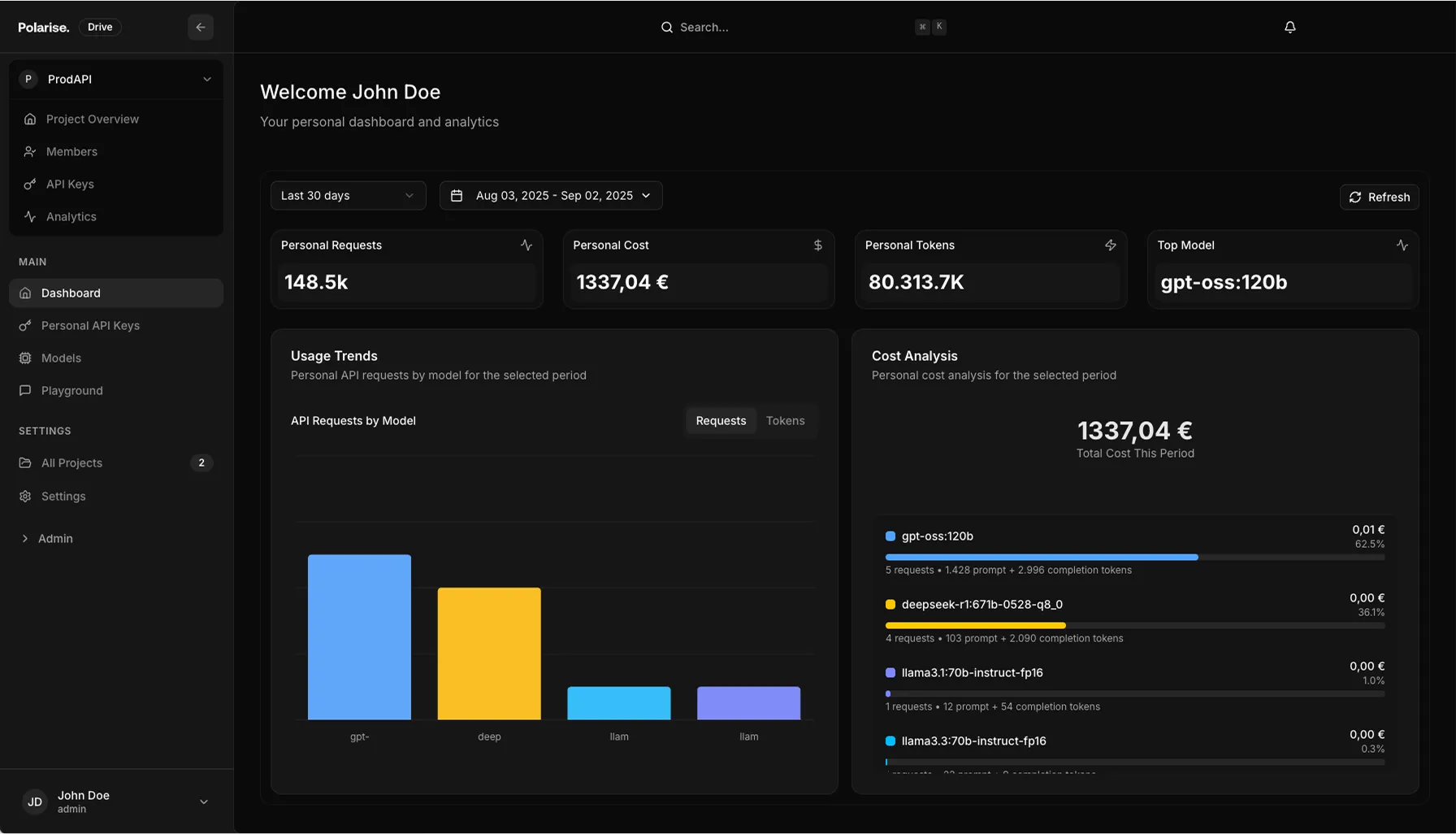

Run Your Favorite AI Models Without Operations Effort

Drive offers seamless scalability and optimized pricing for all your generative AI workloads.

Test, Train, Deploy - with the most comprehensive suite of tools for GenAI.

Run models through our API for consistent performance and flexible capacity. Scale seamlessly from prototype to production, including large-scale workloads.

Choose from a comprehensive range of top-tier models, including DeepSeek, Llama, Flux, Stable Diffusion, Mistral, and Qwen. Leverage support for text, vision, image generation, and fine-tuning.

Create sophisticated applications and AI agents with native function calling, structured JSON outputs, and comprehensive safety guardrails for robust production deployment.
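To make function calling and structured JSON outputs concrete, here is a minimal sketch of the application side: the model returns a JSON object naming a tool and its arguments, and your code validates it and runs the matching function. The payload shape (`name`, `arguments`), the `get_weather` tool, and the `dispatch_tool_call` helper are assumptions for illustration, not the documented Drive API.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical tool: a real application would call a weather service."""
    return {"city": city, "forecast": "sunny"}


# Registry of tools the model is allowed to call (names are illustrative).
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(raw: str) -> dict:
    """Parse a structured JSON tool call and run the matching function.

    Assumes the model emitted an object like
    {"name": "<tool>", "arguments": {...}}; this shape is an assumption.
    """
    call = json.loads(raw)
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name = call.get("name")
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    return TOOLS[name](**args)


if __name__ == "__main__":
    model_output = '{"name": "get_weather", "arguments": {"city": "Berlin"}}'
    print(dispatch_tool_call(model_output))
```

Rejecting unknown tool names and malformed arguments before execution keeps an agent from acting on output that does not match the expected structure.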

Fine-tune models to your specific needs with support for both LoRA and full fine-tuning approaches. Reach out to us for per-token pricing on custom model hosting.

Access powerful embedding models and pgvector-enabled PostgreSQL for vector storage: the core components you need to build retrieval-augmented generation (RAG) systems.
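As a rough sketch of the retrieval step in a RAG system, the example below ranks stored passages by cosine distance to a query embedding and builds a prompt from the best matches. The in-memory ranking mirrors what pgvector's `<=>` (cosine distance) operator does inside PostgreSQL. The table name `documents`, its columns, and the helper names are hypothetical, and the embeddings are toy vectors rather than output from a real embedding model.

```python
import math


def cosine_distance(a, b):
    """Cosine distance (1 - cosine similarity) between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0  # treat zero vectors as maximally dissimilar
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query_vec, rows, k=2):
    """Return the k passages closest to query_vec.

    rows is a list of (text, embedding) pairs.
    """
    ranked = sorted(rows, key=lambda row: cosine_distance(query_vec, row[1]))
    return [text for text, _ in ranked[:k]]


# The same ranking expressed as a pgvector query.
# Table and column names are hypothetical.
PGVECTOR_QUERY = """
SELECT content
FROM documents
ORDER BY embedding <=> %s::vector
LIMIT %s;
"""


def build_prompt(question, passages):
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    rows = [
        ("Invoices are due in 30 days.", [1.0, 0.0]),
        ("The office is closed on Sundays.", [0.0, 1.0]),
        ("Late invoices incur a 2% fee.", [0.9, 0.1]),
    ]
    passages = top_k([1.0, 0.0], rows, k=2)
    print(build_prompt("When are invoices due?", passages))
```

In production the ranking would typically run in the database so that only the top matches leave PostgreSQL.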

Access state-of-the-art AI models across multiple categories - from text generation to image creation, speech synthesis, and more. If you don't see the model you need, reach out to us and we'll add it.

Our platform is engineered for superior performance and scalability, delivering benchmark-backed results.

We strive to be more cost-effective than competitors while remaining sovereign and GDPR-compliant.

Scale seamlessly as your needs grow, with consistent performance at any workload size.

Access 60+ open-source models spanning LLMs, vision, image generation, and embeddings, with new additions monthly.

Leverage NVIDIA NIM for optimized inference, delivering lightning-fast performance and low-latency responses for enterprise AI workloads. Benefit from GPU-accelerated deployment and seamless scaling.

Integrate NVIDIA NeMo Guardrails to enforce safety, security, and compliance in your AI applications. Easily add robust content filtering, data privacy, and policy controls to any workflow.

Deploy state-of-the-art models with confidence using NVIDIA’s enterprise-grade tools. Enjoy end-to-end support for model management, monitoring, and continuous updates through the NIM and NeMo platforms.

Our API is designed for ease of use. Get started quickly with familiar integration.

Our Drive services offer a comprehensive suite of tools for GenAI, including inference, batch processing, image generation, and fine-tuning.

Utilize our hosted open-source models to achieve superior inference results compared to proprietary APIs.

Process multiple requests asynchronously with our high-throughput Batch API.

Access top image generation models through a single platform that scales with your needs.

Transform open-source models into specialized AI solutions using our comprehensive fine-tuning platform.

Book a personal demo to explore the Polarise Drive platform.